
Metadata API & crawling

Qiushibaike API

fmt.Sprintf("https://circle.qiushibaike.com:443/v2/article/%d/info?count=%d&page=%d", articleID, PAGE_COUNT, commentPage)

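- The API returns JSON, and article IDs are sequential, so the crawler simply iterates over them.

- The API paginates comments. The first page returns the article content along with the first page of comments, and later pages return only comments.

- Tech stack: Golang → MongoDB

  - The logic is simple and involves no recursion, so I chose Go.

  - The data is pure JSON and large in volume, so a store like MongoDB that holds JSON directly is clearly the better choice.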
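A minimal Python sketch of the crawl loop described above (the real crawler was written in Go). Several details are assumptions, not facts from these notes: comments are assumed to sit under a hypothetical `comments` key, pages are assumed to start at 1, and a page with fewer than `count` comments is treated as the last one. The real `PAGE_COUNT` value isn't given, so 20 is a placeholder. `fetch` is injected so any HTTP client can be plugged in.

```python
from typing import Callable, Iterator, List, Tuple

# URL template taken from the fmt.Sprintf call above.
INFO_URL = ("https://circle.qiushibaike.com:443/v2/article/{article_id}/info"
            "?count={count}&page={page}")
PAGE_COUNT = 20  # placeholder: the real PAGE_COUNT is not given


def info_url(article_id: int, page: int, count: int = PAGE_COUNT) -> str:
    """Python equivalent of the Go fmt.Sprintf call."""
    return INFO_URL.format(article_id=article_id, count=count, page=page)


def crawl_article(article_id: int, fetch: Callable[[str], dict],
                  count: int = PAGE_COUNT) -> Iterator[dict]:
    """Yield every page of one article.

    Page 1 carries the article itself plus the first comments, and later pages
    carry only comments. Assumes a hypothetical "comments" key and treats a
    short page as the last one.
    """
    page = 1
    while True:
        data = fetch(info_url(article_id, page, count))
        yield data
        if len(data.get("comments", [])) < count:
            return
        page += 1


def crawl_range(start_id: int, end_id: int,
                fetch: Callable[[str], dict]) -> Iterator[Tuple[int, List[dict]]]:
    """IDs are sequential, so the whole site is just a range of IDs."""
    for article_id in range(start_id, end_id):
        yield article_id, list(crawl_article(article_id, fetch))
```

Under this stop rule, an article whose last page happens to be exactly full costs one extra request that returns an empty page.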

Technical design

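- Sending HTTP requests fast:

  - I had used Go's standard HTTP client before, but found it too clunky.

  - I found fasthttp, a library designed specifically for high-performance HTTP.

- Using MongoDB:

  - I usually use some kind of ORM. With a JSON-shaped store like Mongo, though, an ORM seems to limit what you can do, so I didn't use mgm.

  - The crawler first fetches page one and inserts it into the database, then appends the remaining comments to that document.

- Structure:

  - api: performs the actual HTTP requests

  - flow: manages fetching and parsing multi-page comments, and calls the db callback for each page it gets

  - db: handles the storage logic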
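The api → flow → db split can be sketched in Python as follows. The db callback turns page 1 into a new document. It appends later pages with MongoDB's `$push` + `$each` update operators, which is the same shape of update you would pass to pymongo's `Collection.update_one`. Some parts are assumptions: the article ID is used as `_id`, the `comments` field name is invented, and `InMemoryCollection` is a hypothetical stand-in so the sketch runs without a database.

```python
import copy
from typing import Any, Callable, Dict, Iterable


class InMemoryCollection:
    """Stand-in for a pymongo Collection, implementing only what this sketch uses."""

    def __init__(self) -> None:
        self.docs: Dict[Any, dict] = {}

    def insert_one(self, doc: dict) -> None:
        self.docs[doc["_id"]] = copy.deepcopy(doc)

    def update_one(self, flt: dict, update: dict) -> None:
        # Only {"$push": {field: {"$each": [...]}}} is supported here.
        doc = self.docs[flt["_id"]]
        for field, spec in update["$push"].items():
            doc.setdefault(field, []).extend(spec["$each"])


class ArticleStore:
    """db module: page 1 creates the document, later pages append comments."""

    def __init__(self, collection: Any) -> None:
        self.collection = collection

    def on_page(self, article_id: int, page: int, data: dict) -> None:
        if page == 1:
            self.collection.insert_one({"_id": article_id, **data})
        else:
            self.collection.update_one(
                {"_id": article_id},
                {"$push": {"comments": {"$each": data.get("comments", [])}}},
            )


def run_flow(article_id: int, pages: Iterable[dict],
             on_page: Callable[[int, int, dict], None]) -> None:
    """flow module: walk the pages from the api module, one callback per page."""
    for page, data in enumerate(pages, start=1):
        on_page(article_id, page, data)
```

With a real database, `ArticleStore` would simply be given a pymongo collection instead of the in-memory stand-in.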

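Crawl results

- The finished crawl contains 4.7 million records.

- Embarrassingly, at first I forgot to store the article bodies themselves.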

Collecting resource URLs

Extracting URLs

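- My first idea was to work out which JSON fields needed to be kept, then parse them and extract the values.

  - Too tedious.

- Then it occurred to me that the image and video links in the API responses all seem to be full URLs, so running a regex over the raw JSON should be enough.

- The regex is simple:

"(http:|https:).*?"

- Combined with grep -ohP, this prints just the matched URLs. Here -o prints only the match, -h omits file names, and -P enables Perl syntax, which the lazy .*? needs.

- However, the JSON file is 12 GB, so a plain grep is too slow and needed optimizing:

  - Set LC_ALL=C so that grep does not decode UTF-8.

  - When I searched for ways to parallelize grep, the most recommended approach was parallel --pipepart.

  - parallel has a gotcha: it seems to pass the command through a shell, which mangles the double quotes. After a lot of trial and error, I ended up with this:

LC_ALL=C parallel --pipepart -a articles.json grep -ohP "'"'"(http:|https:).*?"'"'" > article_urls.txt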
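For reference, here is the same extraction in Python. This is an illustration, not the command that was actually run. The pattern is the one from the grep command, except that the group is made non-capturing so `re.findall` returns whole matches. Like `grep -o`, each match keeps its surrounding double quotes, which is why later filters test suffixes such as `.webp"`. Working on bytes mirrors the `LC_ALL=C` trick of skipping UTF-8 decoding.

```python
import re
from typing import Iterable, Iterator

# Pattern from the grep command: a double-quoted string that starts with
# http: or https:, matched lazily up to the next double quote. The group is
# non-capturing so that re.findall returns the whole match, like grep -o.
URL_RE = re.compile(rb'"(?:http:|https:).*?"')


def extract_urls(lines: Iterable[bytes]) -> Iterator[bytes]:
    """Yield every quoted URL in the input, quotes included.

    Working on bytes avoids UTF-8 decoding, similar in spirit to LC_ALL=C.
    """
    for line in lines:
        yield from URL_RE.findall(line)


def extract_file(path: str) -> Iterator[bytes]:
    """Stream a large JSON file line by line instead of loading it all."""
    with open(path, "rb") as f:
        yield from extract_urls(f)
```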

Classifying the download URLs

I took a rough look at how the URLs break down by host and pattern:

[(b'rk.mbd.baidu.com', 1),
 (b'ml.mbd.baidu.com', 1),
 (b'nd.mbd.baidu.com', 1),
 (b'live.yuanbobo.com', 1),
 (b'www.cngongji.cn', 1),
 (b'avatar.52flower.xyz', 1),
 (b't.cn', 1),
 (b'test2.app-remix.com', 1),
 (b'fm.qq.com', 1),
 (b'test1.app-remix.com', 1),
 (b'119.145.30.203:8081', 1),
 (b'4bbbww.bid', 1),
 (b'pic.yuanbobo.com', 2),
 (b'remixactivity.qiushibaike.com', 3),
 (b'avatar.huangzewei.me', 3),
 (b'nearby.qiushibaike.com', 4),
 (b'mission.tisykan.xyz', 4),
 (b'pic.app-remix.com', 6),
 (b'live.app-remix.com', 6),
 (b'act.qiushibaike.com', 7),
 (b'static.qiushibaike.com', 9),
 (b'circle.qiushibaike.com', 15),
 (b'qiubai-chick.qiushibaike.com', 18),
 (b'mission-assets.qiushibaike.com', 28),
 (b'www.qiushibaike.com', 30),
 (b'm2.qiushibaike.com', 33),
 (b'qiubai-im.qiushibaike.com', 82),
 # (b'avatar-remix.werewolf.mobi', 3999),  unusable
 # (b'avatar.app-remix.com', 30285),  unusable
 # (b'avatar.yuanbobo.com', 34723),  usable
 (b'circle-video.qiushibaike.com', 117156),  # after filtering: three URL patterns, can be downloaded directly
 (b'qiubai-video.qiushibaike.com', 144434),
 (b'qiubai-video.yitxf.net', 172313),
 (b'pic.qiushibaike.com', 566037),
 # Needs processing, e.g.: http://pic.qiushibaike.com/system/pictures/12186/121860593/medium/LCP7ZAGZVD6PHQHP.webp?imageView2/2/w/500/q/80"
 # Filtering steps tried in the REPL:
 #   t = urls_host_dict_uniq[b'pic.qiushibaike.com']
 #   t = [c for c in t if not c.endswith(b'/default_avatar.webp"')]
 #   t = [c for c in t if not re.findall(br'(medium|thumb)/[0-9]+?\.webp"', c)]
 #   t = [c for c in t if not c.endswith(b'/default_avatar_remix.webp"')]
 #   [c for c in t if b'?imageView2' not in c]
 (b'circle-pic.qiushibaike.com', 3137046)]
# 1. "http://circle-pic.qiushibaike.com/luzxoiTlRCO9XfBo4v5i9BPspAWp?vframe/jpg/offset/0"
#    corresponds to http://circle-video.qiushibaike.com/luzxoiTlRCO9XfBo4v5i9BPspAWp (probably safe to ignore)
# 2. "http://circle-pic.qiushibaike.com/B783A0E4A0E93E5600B3372C83E6FE42?imageView2/2/w/600/q/85"
#    Note that imageView2 may appear more than once:
#    "http://circle-pic.qiushibaike.com/FqmhEYBy5E4602D06UCIoV9YxEHP?imageView2/2/w/600/q/85?imageView2/2/w/500/q/80"

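The notes don't include the code that built a host → URLs mapping like `urls_host_dict_uniq`, so what follows is only a sketch of one way to do it. It first strips the quotes left by `grep -o`. It then takes the `netloc`, so a port such as `:8081` stays part of the host, as it does in the list above. Finally, it deduplicates URLs per host and sorts hosts by URL count in ascending order.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple
from urllib.parse import urlsplit


def group_by_host(quoted_urls: Iterable[bytes]) -> Dict[bytes, List[bytes]]:
    """Map each host (netloc, port included) to its unique URLs, in first-seen order.

    URLs keep the surrounding double quotes that grep -o printed.
    """
    groups: Dict[bytes, Dict[bytes, None]] = defaultdict(dict)
    for q in quoted_urls:
        q = q.strip()  # drop the trailing newline when reading from a file
        host = urlsplit(q.strip(b'"')).netloc
        groups[host][q] = None  # dict used as an insertion-ordered set
    return {host: list(urls) for host, urls in groups.items()}


def host_counts(groups: Dict[bytes, List[bytes]]) -> List[Tuple[bytes, int]]:
    """(host, number of unique URLs), smallest first, like the list above."""
    return sorted(((h, len(u)) for h, u in groups.items()), key=lambda p: p[1])
```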
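Filtering & cleaning URLs

- Deduplication: 12 million URLs were extracted, but only 4.7 million remained after deduplication.

- Drop invalid URLs, such as http://11111.

- Strip the image-transformation query parameters used by Qiniu object storage: imageView2 and imageMogr2.

- Swap out the dead avatar domains.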
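Here is a sketch of the cleaning rules applied in a single pass over string URLs. Two parts are assumptions. The "invalid URL" test (the host must contain a dot) is a heuristic chosen to catch cases like `http://11111`. And `host_map` is a hypothetical mapping from dead avatar hosts to their replacements, because the notes don't record the exact mapping. Cutting at the first `?imageView2`/`?imageMogr2` also handles URLs where imageView2 was appended twice.

```python
import re
from typing import Dict, Iterable, List
from urllib.parse import urlsplit, urlunsplit

# Cut from the first Qiniu image-processing parameter to the end of the URL.
# This also removes repeated parameters such as "?imageView2/...?imageView2/...".
TRANSFORM_RE = re.compile(r"\?(?:imageView2|imageMogr2).*$")


def clean_urls(urls: Iterable[str], host_map: Dict[str, str]) -> List[str]:
    """Strip quotes and image transforms, drop invalid hosts, remap hosts, dedupe."""
    seen: Dict[str, None] = {}  # insertion-ordered set
    for url in urls:
        url = TRANSFORM_RE.sub("", url.strip().strip('"'))
        parts = urlsplit(url)
        host = parts.hostname or ""
        # Heuristic: a usable host contains a dot (domain or IPv4 address),
        # which rejects junk such as http://11111.
        if "." not in host:
            continue
        netloc = host_map.get(parts.netloc, parts.netloc)
        seen[urlunsplit(parts._replace(netloc=netloc))] = None
    return list(seen)
```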

Final URL count

In the end, 4,191,748 URLs remained.

Downloading images

Preparing the aria2 input files

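I considered how best to store files from so many different domains:

- Using the escaped URL as the file name is not a good choice, because:

  - URLs easily produce file names that are too long.

  - The number of files per domain varies enormously, so organizing by URL doesn't split them up well.

- Instead, I decided to simply hash each URL and use the hash as the file name.

  - I chose SHA-1, whose hex digest is 40 characters long.

  - The first two characters of the hash name the directory the file is stored in.

    - This borrows from QQ's file storage layout, where the first few bytes of a file name determine its storage directory.

  - This way, files are spread evenly and randomly across 256 directories, which makes every later operation easier.

- The 4.19 million files were split into shards of 400k each, 11 shards in total.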
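A sketch of generating the aria2 input files. Each entry is a URI line followed by indented per-download options (`dir`, `out`), which follows aria2's input-file syntax. The directory root `/mnt/down` comes from the tar commands further down. The shard file names `qiushibaike_{i}.txt` are hypothetical. The 40-character SHA-1 of the cleaned URL becomes the file name, and its first two hex characters pick one of the 256 directories.

```python
import hashlib
from typing import List

DOWNLOAD_ROOT = "/mnt/down"  # root later archived by the tar commands
SHARD_SIZE = 400_000


def storage_name(url: str) -> str:
    """40-character SHA-1 hex digest of the URL, used as the file name."""
    return hashlib.sha1(url.encode("utf-8")).hexdigest()


def aria2_entry(url: str, root: str = DOWNLOAD_ROOT) -> str:
    """One input-file entry: the URI, then indented per-download options."""
    name = storage_name(url)
    return f"{url}\n  dir={root}/{name[:2]}\n  out={name}\n"


def shard(urls: List[str], size: int = SHARD_SIZE) -> List[List[str]]:
    """Split the URL list into consecutive shards of at most `size` entries."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def write_input_files(urls: List[str], prefix: str = "qiushibaike") -> int:
    """Write one aria2 input file per shard and return how many were written.

    The file naming scheme is hypothetical.
    """
    shards = shard(urls)
    for i, part in enumerate(shards, start=1):
        with open(f"{prefix}_{i}.txt", "w", encoding="utf-8") as f:
            f.writelines(aria2_entry(u) for u in part)
    return len(shards)
```

With 4,191,748 URLs and 400k per shard, this gives ten full shards plus one partial shard: 11 input files.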

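aria2 configuration

Optimizations for small files:

- #file-allocation=prealloc

- max-concurrent-downloads=200

- max-connection-per-server=1

- split=3

Download-complete hook disabled (I couldn't be bothered to set it up):

- #on-download-complete

The input file is given on the command line:

- #input-file

Because of the enormous number of files, deferred-input is mandatory, since otherwise loading the list exhausts memory. aria2 disables deferred-input when save-session is also set, so session saving is turned off:

- #save-session=down.session

- #save-session-interval=60

- deferred-input=true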

Final configuration:

Qiushibaike backup: aria2 final configuration

Deploying the downloads

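- In testing, a single machine only reached about 11M of download bandwidth, which was worryingly slow.

- So I decided to download on multiple machines. I imaged the first machine and saved the image as a launch template.

- Since there are 11 shards, I created 10 more machines with the same configuration, for 11 in total.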

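Aria2 issue

By default, aria2 used the Clash proxy configured in the environment variables, which produced a huge number of connections to local port 7890.

Fix: in AriaNg, manually clear all_proxy, http_proxy and https_proxy.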
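The notes fixed this through AriaNg. A different approach, sketched here and not what was done, is to launch aria2c with the proxy variables stripped from its environment. aria2 reads proxy settings such as `http_proxy` and `all_proxy` from the environment, and the upper-case variants are removed here just in case. This only helps if the proxy came from the environment; a proxy set in the config file would still apply. The config and input file paths are placeholders.

```python
import os
import subprocess
from typing import Dict, Mapping

# Lower-case names are the ones aria2 reads; upper-case variants are dropped too.
PROXY_VARS = ("all_proxy", "http_proxy", "https_proxy", "ftp_proxy")


def env_without_proxies(env: Mapping[str, str]) -> Dict[str, str]:
    """Copy of `env` with every proxy variable removed."""
    drop = {v for name in PROXY_VARS for v in (name, name.upper())}
    return {k: v for k, v in env.items() if k not in drop}


def launch_aria2(conf_path: str, input_file: str) -> subprocess.Popen:
    """Start aria2c without inheriting the shell's Clash proxy settings."""
    cmd = ["aria2c", f"--conf-path={conf_path}", f"--input-file={input_file}"]
    return subprocess.Popen(cmd, env=env_without_proxies(os.environ))
```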

Download results

In total, 4.19 million files were downloaded, taking up 1.33 TB.

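Uploading the backup

Fixing the 115 upload

- 115 changed its upload API. The old uplb upload no longer went through and showed a prompt to update to the latest version. After trying several approaches, I finally got it working.

- 115 also changed its upload logic: files without an extension can no longer be uploaded, and after the OSS upload the response reports a file_type error.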

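Streaming uploads to 115

- 115 does not support streaming uploads, because the file size must be known in advance:

  - Normally, an upload has to supply the file's SHA-1 hash and its size.

  - But in testing, 115 accepted an upload with a wrong hash, and the file could still be downloaded correctly.

  - So the input stream can be cut into fixed-size chunks, with the last chunk padded to full length with zero bytes. This makes uploads effectively streaming.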
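The padding trick can be sketched as a generator that cuts a byte stream into chunks of exactly `chunk_size` bytes and zero-fills the last one, so every chunk's size is known before its upload starts. Blocks are yielded as soon as they are read, so a whole chunk never has to sit in memory. The catch is that each chunk iterator must be consumed completely before the next one is requested. `chunk_name` mirrors the `.tar.00001`-style names in the verification output further down. The upload call itself is not shown and would be specific to the 115 client. Because the payload is a tar archive, the zero bytes after the end-of-archive marker should be harmless when extracting.

```python
import io
from typing import BinaryIO, Iterator, List

CHUNK_BYTES = 5368709120  # 5 GiB, the chunk size passed to the upload script


def _chunk_blocks(stream: BinaryIO, first: bytes, chunk_size: int,
                  block_size: int) -> Iterator[bytes]:
    """Yield blocks totalling exactly chunk_size bytes, zero-padding at EOF."""
    yield first
    remaining = chunk_size - len(first)
    eof = False
    while remaining > 0:
        data = b"" if eof else stream.read(min(block_size, remaining))
        if not data:  # input ended mid-chunk: fill the rest with zero bytes
            eof = True
            data = b"\0" * min(block_size, remaining)
        remaining -= len(data)
        yield data


def split_stream(stream: BinaryIO, chunk_size: int = CHUNK_BYTES,
                 block_size: int = 1 << 20) -> Iterator[Iterator[bytes]]:
    """Yield one block iterator per fixed-size chunk of the stream.

    Each iterator must be fully consumed before asking for the next chunk.
    """
    while True:
        first = stream.read(min(block_size, chunk_size))
        if not first:
            return  # input ended exactly on a chunk boundary (or was empty)
        yield _chunk_blocks(stream, first, chunk_size, block_size)


def chunk_name(base: str, index: int) -> str:
    """Name chunks like qiushibaike_downlogs.tar.00001 (1-based, 5 digits)."""
    return f"{base}.{index:05d}"


def split_bytes(data: bytes, chunk_size: int, block_size: int = 1 << 20) -> List[bytes]:
    """In-memory convenience wrapper, handy for trying the splitter out."""
    return [b"".join(blocks)
            for blocks in split_stream(io.BytesIO(data), chunk_size, block_size)]
```

In use, the stream would be `sys.stdin.buffer` fed by tar. Each chunk's block iterator would be passed to the upload code together with the fixed size `CHUNK_BYTES`.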

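Starting the upload

- From what I found, Baidu Netdisk allows at most 20G per file. 115 advertises 115G, but non-members seem to get only 5G.

- Initial plan: stream everything up directly in ~16G chunks (16106127360 bytes, i.e. 15 GiB):

(cd /mnt/down; tar cf - .) | pv -pterbTCB 1G | unbuffer python five_upload_stream.py misty/qiushibaike_pic qiushibaike_pic.tar 16106127360 | tee qiushibaike_upload.log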

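- Problem 1: the Python log output couldn't be captured with tee.

  - Python's stdout was evidently being buffered, since it switches to block buffering when writing to a pipe. I tried putting unbuffer in front of python, but unbuffer turns out to have many pitfalls (for example, needing -p when it runs in the middle of a pipeline).

  - Simply setting PYTHONUNBUFFERED=1 solved it.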

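- Problem 2: NFS stalls on I/O.

  - NFS latency is too high. Single-threaded tar is slow, averaging only 3 MB/s, so the backup would take far too long.

  - So I decided to parallelize, but tar can't read in parallel.

  - Instead, the backup was split into 4 parts that run side by side: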

(cd /mnt/down; tar cf - 00 01 02 03 04 05 06 07 08 09 0a 0b 0c 0d 0e 0f 10 11 12 13 14 15 16 17 18 19 1a 1b 1c 1d 1e 1f 20 21 22 23 24 25 26 27 28 29 2a 2b 2c 2d 2e 2f 30 31 32 33 34 35 36 37 38 39 3a 3b 3c 3d 3e 3f) | pv -pterbTCB 256M | PYTHONUNBUFFERED=1 python five_upload_stream.py misty/qiushibaike_pic qiushibaike_pic_1.tar 5368709120 | tee qiushibaike_upload_1.log
(cd /mnt/down; tar cf - 40 41 42 43 44 45 46 47 48 49 4a 4b 4c 4d 4e 4f 50 51 52 53 54 55 56 57 58 59 5a 5b 5c 5d 5e 5f 60 61 62 63 64 65 66 67 68 69 6a 6b 6c 6d 6e 6f 70 71 72 73 74 75 76 77 78 79 7a 7b 7c 7d 7e 7f) | pv -pterbTCB 256M | PYTHONUNBUFFERED=1 python five_upload_stream.py misty/qiushibaike_pic qiushibaike_pic_2.tar 5368709120 | tee qiushibaike_upload_2.log
(cd /mnt/down; tar cf - 80 81 82 83 84 85 86 87 88 89 8a 8b 8c 8d 8e 8f 90 91 92 93 94 95 96 97 98 99 9a 9b 9c 9d 9e 9f a0 a1 a2 a3 a4 a5 a6 a7 a8 a9 aa ab ac ad ae af b0 b1 b2 b3 b4 b5 b6 b7 b8 b9 ba bb bc bd be bf) | pv -pterbTCB 256M | PYTHONUNBUFFERED=1 python five_upload_stream.py misty/qiushibaike_pic qiushibaike_pic_3.tar 5368709120 | tee qiushibaike_upload_3.log
(cd /mnt/down; tar cf - c0 c1 c2 c3 c4 c5 c6 c7 c8 c9 ca cb cc cd ce cf d0 d1 d2 d3 d4 d5 d6 d7 d8 d9 da db dc dd de df e0 e1 e2 e3 e4 e5 e6 e7 e8 e9 ea eb ec ed ee ef f0 f1 f2 f3 f4 f5 f6 f7 f8 f9 fa fb fc fd fe ff) | pv -pterbTCB 256M | PYTHONUNBUFFERED=1 python five_upload_stream.py misty/qiushibaike_pic qiushibaike_pic_4.tar 5368709120 | tee qiushibaike_upload_4.log
(cd /mnt/down; tar cf - logs) | pv -pterbTCB 256M | PYTHONUNBUFFERED=1 python five_upload_stream.py misty/qiushibaike_pic qiushibaike_downlogs.tar 5368709120 | tee qiushibaike_upload_downlogs.log
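Typing out 256 directory names by hand is error-prone, so here is a sketch of a small generator that reproduces the command lines above. It splits the 256 two-hex-digit directories into contiguous groups and turns each group into one tar | pv | upload pipeline. Splitting one group again with the same helper gives the finer 16-directory pieces (`_1_1` … `_1_4`) used for problem 3.

```python
from typing import List

ROOT = "/mnt/down"
CHUNK_BYTES = 5368709120  # 5 GiB per uploaded chunk


def hex_dirs() -> List[str]:
    """The 256 directories named by the first two hex characters of the SHA-1."""
    return [f"{i:02x}" for i in range(256)]


def split_evenly(items: List[str], n: int) -> List[List[str]]:
    """Split items into n contiguous groups whose sizes differ by at most one."""
    k, m = divmod(len(items), n)
    return [items[i * k + min(i, m):(i + 1) * k + min(i + 1, m)] for i in range(n)]


def upload_command(dirs: List[str], tag: str) -> str:
    """One pipeline: tar the directories, show progress with pv, upload, log."""
    return (
        f"(cd {ROOT}; tar cf - {' '.join(dirs)}) | pv -pterbTCB 256M | "
        "PYTHONUNBUFFERED=1 python five_upload_stream.py misty/qiushibaike_pic "
        f"qiushibaike_pic_{tag}.tar {CHUNK_BYTES} | tee qiushibaike_upload_{tag}.log"
    )


if __name__ == "__main__":
    for i, group in enumerate(split_evenly(hex_dirs(), 4), start=1):
        print(upload_command(group, str(i)))
```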

- Problem 3: the 115 servers are unstable.

Traceback (most recent call last):
  File "five_upload_stream.py", line 110, in <module>
    main()
  File "five_upload_stream.py", line 103, in main
    ret = pan.finish_upload(task.extra_data, responses)
  File "/home/misty/unipan/unipan/../pan_mods/five_pan/five_pan/pan.py", line 370, in finish_upload
-----------RES-----------
203 Non-Authoritative Information
Connection: close
Content-Length: 298
Content-Type: application/xml
Date: Wed, 28 Dec 2022 13:16:54 GMT
Etag: "78C21C5927DAA29D883FCB7EFE380FF3"
Server: AliyunOSS
X-Oss-Hash-Crc64ecma: 14722912621785675438
X-Oss-Hash-Func: SHA-1
X-Oss-Hash-Sha1: BD3798026202D57F33B942E148683A610058A7D5
X-Oss-Hash-Value: BD3798026202D57F33B942E148683A610058A7D5
X-Oss-Request-Id: 63AC3C1057B5103037BD3D29
X-Oss-Server-Time: 87996
[u'<?xml version="1.0" encoding="UTF-8"?>\n<Error>\n <Code>CallbackFailed</Code>\n <Message>Error status : -1.OSS can not connect to your callbackUrl, please check it.</Message>\n <RequestId>63AC3C1057B5103037BD3D29</RequestId>\n <HostId>fhnfile.oss-cn-shenzhen-internal.aliyuncs.com</HostId>\n</Error>\n']
    ret = json.loads(responses[0])
  File "/opt/pyenv/versions/2.7.18/lib/python2.7/json/__init__.py", line 339, in loads
    return _default_decoder.decode(s)
  File "/opt/pyenv/versions/2.7.18/lib/python2.7/json/decoder.py", line 364, in decode
    obj, end = self.raw_decode(s, idx=_w(s, 0).end())
  File "/opt/pyenv/versions/2.7.18/lib/python2.7/json/decoder.py", line 382, in raw_decode
    raise ValueError("No JSON object could be decoded")
ValueError: No JSON object could be decoded

Fix: split further, into 4 smaller pieces at a time:

(cd /mnt/down; tar cf - 00 01 02 03 04 05 06 07 08 09 0a 0b 0c 0d 0e 0f) | pv -pterbTCB 256M | PYTHONUNBUFFERED=1 python five_upload_stream.py misty/qiushibaike_pic qiushibaike_pic_1_1.tar 5368709120 | tee qiushibaike_upload_1_1.log
(cd /mnt/down; tar cf - 10 11 12 13 14 15 16 17 18 19 1a 1b 1c 1d 1e 1f) | pv -pterbTCB 256M | PYTHONUNBUFFERED=1 python five_upload_stream.py misty/qiushibaike_pic qiushibaike_pic_1_2.tar 5368709120 | tee qiushibaike_upload_1_2.log
(cd /mnt/down; tar cf - 20 21 22 23 24 25 26 27 28 29 2a 2b 2c 2d 2e 2f) | pv -pterbTCB 256M | PYTHONUNBUFFERED=1 python five_upload_stream.py misty/qiushibaike_pic qiushibaike_pic_1_3.tar 5368709120 | tee qiushibaike_upload_1_3.log
(cd /mnt/down; tar cf - 30 31 32 33 34 35 36 37 38 39 3a 3b 3c 3d 3e 3f) | pv -pterbTCB 256M | PYTHONUNBUFFERED=1 python five_upload_stream.py misty/qiushibaike_pic qiushibaike_pic_1_4.tar 5368709120 | tee qiushibaike_upload_1_4.log
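The traceback for problem 3 shows the real failure hiding behind the `ValueError`. OSS answered the final request with an XML `<Error>` document (`CallbackFailed`: OSS could not reach the callback URL), and the script passed it straight to `json.loads`. Below is a sketch of a more explicit check. It is written for Python 3, so it would need adapting for the Python 2.7 upload script seen in the traceback. `OssError` and `parse_finish_response` are hypothetical names, and it is untested here whether retrying after such an error would succeed.

```python
import json
import xml.etree.ElementTree as ET
from typing import Any, Union


class OssError(Exception):
    """Raised when OSS returns an <Error> XML document instead of the expected JSON."""

    def __init__(self, code: str, message: str) -> None:
        super().__init__(f"{code}: {message}")
        self.code = code
        self.message = message


def parse_finish_response(body: Union[str, bytes]) -> Any:
    """Decode JSON, or raise OssError carrying the OSS error code."""
    try:
        return json.loads(body)
    except ValueError:  # json.JSONDecodeError subclasses ValueError
        pass
    raw = body.encode("utf-8") if isinstance(body, str) else body
    try:
        root = ET.fromstring(raw)
    except ET.ParseError:
        raise ValueError(f"response is neither JSON nor XML: {body[:200]!r}") from None
    if root.tag == "Error":
        raise OssError(root.findtext("Code", ""), root.findtext("Message", ""))
    raise ValueError(f"unexpected XML root element <{root.tag}>")
```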

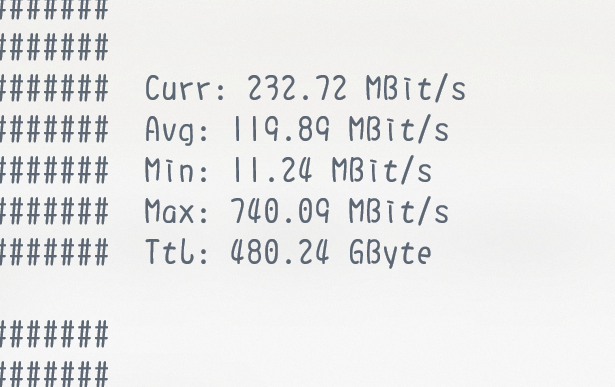

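Upload speed

- After these optimizations, nload reported:

  - Average upload speed per machine: 100 Mbps, about 13 MB/s

  - In practice, 100G took about two and a half hours.

  - At that rate, 1200G works out to about 30 hours (12 × 2.5 h), i.e. well over a day.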
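The throughput figures can be checked with a little arithmetic. 100 Mbps is 12.5 MB/s, at which 100 GB would ideally take about 2.2 hours, so the measured 2.5 hours includes some overhead. Scaling the measured 100G in 2.5 hours linearly gives the 1200G estimate. Decimal units (1 GB = 1000 MB) are assumed.

```python
def mbps_to_mb_per_s(mbps: float) -> float:
    """Megabits per second to megabytes per second (8 bits per byte)."""
    return mbps / 8


def ideal_hours(total_gb: float, mb_per_s: float) -> float:
    """Hours to move total_gb at a constant mb_per_s (decimal units)."""
    return total_gb * 1000 / mb_per_s / 3600


def extrapolated_hours(total_gb: float, sample_gb: float, sample_hours: float) -> float:
    """Scale a measured transfer time linearly to a larger total."""
    return total_gb / sample_gb * sample_hours


if __name__ == "__main__":
    rate = mbps_to_mb_per_s(100)  # 12.5 MB/s
    print(f"ideal 100 GB: {ideal_hours(100, rate):.1f} h")  # ideal 100 GB: 2.2 h
    print(f"1200 GB: {extrapolated_hours(1200, 100, 2.5):.0f} h")  # 1200 GB: 30 h
```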

Verifying the upload

- On the server:

find . -type f -print | xargs sha1sum

- Locally:

find . -type f -print | parallel sha1sum
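The checks below compare two sha1sum listings row by row. Here is a sketch of doing that comparison in code. It accepts both text-mode lines (`hash  ./file`) and binary-mode lines (`hash *./file`), which explains the leading `*` in one of the columns. Digests are compared case-insensitively because one listing uses upper-case hex.

```python
from typing import Dict, Iterable, List, Tuple


def parse_sha1sum(lines: Iterable[str]) -> Dict[str, str]:
    """Parse sha1sum output into {path: lower-case hex digest}.

    sha1sum writes "<hash>  <path>" in text mode and "<hash> *<path>" in
    binary mode; both forms are accepted.
    """
    result: Dict[str, str] = {}
    for line in lines:
        line = line.rstrip("\r\n")
        if not line:
            continue
        digest, rest = line.split(" ", 1)
        path = rest[1:] if rest[:1] in (" ", "*") else rest
        result[path] = digest.lower()
    return result


def compare(a: Dict[str, str], b: Dict[str, str]) -> Tuple[List[str], List[str], List[str]]:
    """Return (paths whose digests differ, paths only in a, paths only in b)."""
    mismatched = sorted(p for p in a.keys() & b.keys() if a[p] != b[p])
    return mismatched, sorted(a.keys() - b.keys()), sorted(b.keys() - a.keys())
```

For example, the output of both commands can be saved to files and passed to `compare(parse_sha1sum(f1), parse_sha1sum(f2))`. Three empty lists mean every file matches.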

Integrity check before extraction: passed

| SHA-1 | File | SHA-1 (other copy) | Match |
|---|---|---|---|
| 5ae08025e03b0678434efdf0c1783f510623b6c0 | *./qiushibaike_downlogs.tar.00001 | 5AE08025E03B0678434EFDF0C1783F510623B6C0 | TRUE |
| a4bcb8c19acd3e401d8d21469cbb88069978f8f2 | *./qiushibaike_downlogs.tar.00002 | A4BCB8C19ACD3E401D8D21469CBB88069978F8F2 | TRUE |
| ba217f19a45299b1d800b1dda50f1b4418103ff0 | *./qiushibaike_downlogs.tar.00003 | BA217F19A45299B1D800B1DDA50F1B4418103FF0 | TRUE |
| d8afa4ebfc79f7da20543aa1a07977f9da0c0015 | *./qiushibaike_downlogs.tar.00004 | D8AFA4EBFC79F7DA20543AA1A07977F9DA0C0015 | TRUE |
| 621844da554c9bb96a912448dbf2e0560aea3471 | *./qiushibaike_downlogs.tar.00005 | 621844DA554C9BB96A912448DBF2E0560AEA3471 | TRUE |
| 70373b8445aa3a0fcbe785f871ddf1ff422b9b58 | *./qiushibaike_downlogs.tar.00006 | 70373B8445AA3A0FCBE785F871DDF1FF422B9B58 | TRUE |
| 9e53dc6c490692ea407b7da1ceb5112a3242ace6 | *./qiushibaike_downlogs.tar.00007 | 9E53DC6C490692EA407B7DA1CEB5112A3242ACE6 | TRUE |
| 8351bfe4d26755cfa01fdaee17f7bf7aac8d04b8 | *./qiushibaike_downlogs.tar.00008 | 8351BFE4D26755CFA01FDAEE17F7BF7AAC8D04B8 | TRUE |
| 3c5150ac4b806888dd368aed1637c848f2aeb88a | *./qiushibaike_downlogs.tar.00009 | 3C5150AC4B806888DD368AED1637C848F2AEB88A | TRUE |
| 235d2889e74077fe74df9a6a64c7f58f80c2d639 | *./qiushibaike_downlogs.tar.00010 | 235D2889E74077FE74DF9A6A64C7F58F80C2D639 | TRUE |
| ba7cdeacdea0a8c428a2f942c3c208ff193c2486 | *./qiushibaike_downlogs.tar.00011 | BA7CDEACDEA0A8C428A2F942C3C208FF193C2486 | TRUE |
| 5d479d218442740b42818337ba16d82a89c64424 | *./qiushibaike_downlogs.tar.00012 | 5D479D218442740B42818337BA16D82A89C64424 | TRUE |
| 61ad50382fb49c6e65413798767d96ade75633a2 | *./qiushibaike_downlogs.tar.00013 | 61AD50382FB49C6E65413798767D96ADE75633A2 | TRUE |
| 581e064cf0897c58d64e5b6211f99177074f2359 | *./qiushibaike_downlogs.tar.00014 | 581E064CF0897C58D64E5B6211F99177074F2359 | TRUE |
| 98bb2817f6f84d29f036f0ad17107a4dae6954e5 | *./qiushibaike_downlogs.tar.00015 | 98BB2817F6F84D29F036F0AD17107A4DAE6954E5 | TRUE |
| 05c62cd4d65230f074b5b537be5fbec8ea316358 | *./qiushibaike_downlogs.tar.00016 | 05C62CD4D65230F074B5B537BE5FBEC8EA316358 | TRUE |
| 57c7ded6724570bcbfebaec2e8116c852237dff1 | *./qiushibaike_downlogs.tar.00017 | 57C7DED6724570BCBFEBAEC2E8116C852237DFF1 | TRUE |
| 163f1908715bda0035444b0052007094adc008cd | *./qiushibaike_downlogs.tar.00018 | 163F1908715BDA0035444B0052007094ADC008CD | TRUE |
| 1e376f9d550aa9ba0ba3b75e5c2f663f8052d779 | *./qiushibaike_downlogs.tar.00019 | 1E376F9D550AA9BA0BA3B75E5C2F663F8052D779 | TRUE |
| da236ea8a33e7456d47597304fda71cdfe58ce90 | *./qiushibaike_downlogs.tar.00020 | DA236EA8A33E7456D47597304FDA71CDFE58CE90 | TRUE |
| 7653d2ba39461daed6488d15c072ed73aa81634d | *./qiushibaike_downlogs.tar.00021 | 7653D2BA39461DAED6488D15C072ED73AA81634D | TRUE |
| 59f32b2536c58a48d5cd67c636b7b39cc759c590 | *./qiushibaike_downlogs.tar.00022 | 59F32B2536C58A48D5CD67C636B7B39CC759C590 | TRUE |
| 403418e6631bbbdf64797245ee693217c7eb1122 | *./qiushibaike_downlogs.tar.00023 | 403418E6631BBBDF64797245EE693217C7EB1122 | TRUE |
| acf93e4d84b904346c4abf46e62140b93f1ca64a | *./qiushibaike_downlogs.tar.00024 | ACF93E4D84B904346C4ABF46E62140B93F1CA64A | TRUE |
| 7e5bad26f9c5887ef93e5251b6eef3b4971355c0 | *./qiushibaike_downlogs.tar.00025 | 7E5BAD26F9C5887EF93E5251B6EEF3B4971355C0 | TRUE |
| 1704477096245b4d32e8dd6d26c91d002fc070f4 | *./qiushibaike_downlogs.tar.00026 | 1704477096245B4D32E8DD6D26C91D002FC070F4 | TRUE |
| 4b0cbce7a816268b8ea39d7700636528bf5bf570 | *./qiushibaike_downlogs.tar.00027 | 4B0CBCE7A816268B8EA39D7700636528BF5BF570 | TRUE |

Integrity check after extraction: passed

| SHA-1 | File | SHA-1 (other copy) | File (other copy) | Match |
|---|---|---|---|---|
| cd4b65ab28e15079cfa537a09548cf4c91181584 | *./down_qiushibaike1.log | cd4b65ab28e15079cfa537a09548cf4c91181584 | ./down_qiushibaike1.log | TRUE |
| 68899dd932017eb65d5e33ae40c7a7947979c131 | *./down_qiushibaike10.log | 68899dd932017eb65d5e33ae40c7a7947979c131 | ./down_qiushibaike10.log | TRUE |
| 7d8a8238cc694ff1254de5d15f71855cf42b0b4d | *./down_qiushibaike11.log | 7d8a8238cc694ff1254de5d15f71855cf42b0b4d | ./down_qiushibaike11.log | TRUE |
| 844596da61e2c2d3273eb0bbd78b4bafb5fd48e2 | *./down_qiushibaike2.log | 844596da61e2c2d3273eb0bbd78b4bafb5fd48e2 | ./down_qiushibaike2.log | TRUE |
| 6f133f4302bac8517bed0a4a03e111a12b5cb12d | *./down_qiushibaike3.log | 6f133f4302bac8517bed0a4a03e111a12b5cb12d | ./down_qiushibaike3.log | TRUE |
| 845b5da32d32082589a46f1631bea975fd855f80 | *./down_qiushibaike4.log | 845b5da32d32082589a46f1631bea975fd855f80 | ./down_qiushibaike4.log | TRUE |
| f77633ce44d58d34fecf7485af389d81e7ca5d51 | *./down_qiushibaike5.log | f77633ce44d58d34fecf7485af389d81e7ca5d51 | ./down_qiushibaike5.log | TRUE |
| 4553da24f13e06fa1c33819b8da22db790575db4 | *./down_qiushibaike6.log | 4553da24f13e06fa1c33819b8da22db790575db4 | ./down_qiushibaike6.log | TRUE |
| 786617f456ccf147f043f7355d95072239283549 | *./down_qiushibaike7.log | 786617f456ccf147f043f7355d95072239283549 | ./down_qiushibaike7.log | TRUE |
| a71defd0181570d8e43ae5b79fd8d9704bed085d | *./down_qiushibaike8.log | a71defd0181570d8e43ae5b79fd8d9704bed085d | ./down_qiushibaike8.log | TRUE |
| 826450b90c194597ca978fa2b928df5a54ef9464 | *./down_qiushibaike9.log | 826450b90c194597ca978fa2b928df5a54ef9464 | ./down_qiushibaike9.log | TRUE |

Cost

- Alibaba Cloud: about 40 CNY

- Tencent Cloud: about 40 CNY